Same Revolution, Different Tools

A framework for staying useful when AI writes the code

Imagine waking up one day and realizing that your entire industry has shifted. And you? Your role? It’ll never be the same.

That’s the world we’re living in with software engineering. Some people say the sky is falling. Some say the future is coming. “I swear AGI will be here before you know it!”

What’s undeniable is that a revolution is happening: not the first, but ours. The only question is where we fit in this new world, and how we operate in it.

Three talks this week gave me a framework. The speakers didn’t coordinate, but they told the same story: control moved. Not to the AI, but to a higher level, one where you’re not writing code but directing it.

Control the Objective

Nitya Narasimhan (Senior Cloud Advocate at Microsoft) opened with what GitHub Next is calling Continuous AI — the successor to CI/CD. The old model was rules-based: learn YAML syntax, write GitHub Actions, maintain the pipeline. The new model: describe your intent, let the LLM generate the automation.

Her demo: she needed a GitHub Action to generate documentation and keep it up to date. Instead of writing YAML, she described the objective in natural language. The agent generated a human-readable spec, then compiled it to a working GitHub Action.

That’s a shift in where control lives. The barrier to entry drops — you don’t need to know GitHub Actions syntax. But the demand for clarity goes up. You can’t hide behind “I don’t know YAML.” The question becomes: do you actually know what you’re trying to achieve?

Syntax you can Google. Intent requires thinking.

(Nitya also demoed GitHub Spark for building mini-apps with natural language. Interesting, but I have questions about the economics of LLM backends. That’s a deeper dive for another time.)

Control the Context

Simon Maple (Head of Developer Relations at Tessl) gave the second talk, “Specs as Context: giving your coding agents 20–20 vision.” It hit close to home.

Glenn Ericksen and I have been circling this problem for months: How do you take patterns and turn them into primitives that an LLM can reuse? Not just syntax hints. Actual guardrails that persist without constant prodding.

You know the friction. You’re mid-session and the agent generates code using the wrong version’s syntax. You correct it: “Please update X to use the syntax for version Y.” It complies. Next file, same mistake. The context drifts. You spend half your time keeping the model on the rails.

Tessl is one answer. It’s a registry; think of it as a package manager for agent context. Run npx @tessl/cli init and it scans your dependencies and pulls in version-specific specs. Specs come in two types:

Documentation: The agent opts in when needed. Dips in, grabs what’s relevant, gets out.

Rules: Injected. No opt-in. Style guides, security policies. Mandatory, not requested.
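To make the documentation/rules split concrete, here is a minimal Python sketch of how a context assembler might treat the two types. It is not Tessl’s implementation or file format: Spec, ContextRegistry, and build_prompt_context are hypothetical names, and the relevance check (the task names the package, and the installed version matches the doc’s pinned version) is a deliberately naive stand-in for whatever retrieval a real tool does.

```python
from dataclasses import dataclass, field


@dataclass
class Spec:
    """One unit of agent context. Hypothetical model, not Tessl's spec format."""
    name: str
    kind: str     # "rule" = always injected, "doc" = pulled in only when relevant
    package: str  # dependency the spec describes (ignored for rules)
    version: str  # version the spec was written for (ignored for rules)
    body: str


@dataclass
class ContextRegistry:
    specs: list[Spec] = field(default_factory=list)

    def add(self, spec: Spec) -> None:
        if spec.kind not in ("rule", "doc"):
            raise ValueError(f"unknown spec kind: {spec.kind}")
        self.specs.append(spec)

    def build_prompt_context(self, task: str, installed: dict[str, str]) -> list[str]:
        """Assemble the context an agent sees before it starts a task."""
        # Rules: mandatory, injected for every task, no opt-in.
        context = [s.body for s in self.specs if s.kind == "rule"]
        # Docs: opt-in. Naive relevance check: the task names the package
        # and the project's installed version matches the doc's version.
        task_lower = task.lower()
        for s in self.specs:
            relevant = s.package.lower() in task_lower
            pinned = installed.get(s.package) == s.version
            if s.kind == "doc" and relevant and pinned:
                context.append(s.body)
        return context


# Illustrative usage: package names, versions, and spec text are made up.
registry = ContextRegistry()
registry.add(Spec("security-policy", "rule", "*", "*", "Never log secrets."))
registry.add(Spec("react-18", "doc", "react", "18.2.0", "Use the React 18 APIs."))
registry.add(Spec("react-19", "doc", "react", "19.0.0", "Use the React 19 APIs."))

installed = {"react": "19.0.0"}
print(registry.build_prompt_context("Add a form component in React", installed))
# ['Never log secrets.', 'Use the React 19 APIs.']
print(registry.build_prompt_context("Fix the CI script", installed))
# ['Never log secrets.']
```

Pinning each doc to a version is what targets the wrong-version-syntax drift: in this sketch the agent never sees React 18 guidance in a React 19 project, so there is nothing to correct mid-session. The security rule, meanwhile, shows up for every task whether or not anyone asked for it.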

This is control at a higher plane. You’re not correcting the LLM’s mistakes mid-session. You’re shaping what it knows before it starts. The guardrails are there from the first prompt.

What Glenn and I hoped for when building our POC: rules that persist, patterns that stick, context that doesn’t drift. Tessl might be onto something. I don’t know if it holds at scale, but the framing is right.

Control the Access — and Know What Happened

We’ve been talking about AI that helps you build. But what about AI that runs — in production, calling APIs, taking actions?

Tracy Bannon (Software Architect and Researcher at The MITRE Corporation) took this head-on. Her talk, “Architectural Amnesia in the Age of Agentic AI,” traced the path from human-in-the-loop strategies up to fully autonomous agents. The faster we move, the higher the risk. So how do we move quickly and safely?

She cited a real incident that happened because speed came at the cost of safety. In summer 2025, attackers configured Claude Code with a CLAUDE.md file that served as an operational playbook. The agent followed it: it scanned networks, harvested credentials, moved laterally, exfiltrated data, and drafted extortion notes. At least seventeen organizations were hit.

The takeaway from Anthropic: the sophistication of the attacker no longer needs to match the complexity of the attack.

Her prescription: governance that matches your level of autonomy. Can you answer three questions? What agents exist? What are they allowed to do? What did they actually do? If not, you’re flying blind.

Not bureaucracy. Scaffolding.
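One way to read Tracy’s three questions is as three pieces of scaffolding: an inventory, an allowlist, and an audit log. The Python below is a minimal sketch under that assumption, not anything she or MITRE prescribed; AgentGovernor, AgentRecord, and their methods are hypothetical names. Actions are denied by default, and every request is logged whether or not it was allowed, so “what did they actually do?” also covers what they tried to do.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentRecord:
    name: str
    owner: str
    allowed_actions: set[str]  # explicit allowlist; anything else is denied


@dataclass
class AuditEvent:
    agent: str
    action: str
    target: str
    allowed: bool
    at: datetime


@dataclass
class AgentGovernor:
    """Hypothetical registry: what exists, what may it do, what did it do."""
    agents: dict[str, AgentRecord] = field(default_factory=dict)  # inventory
    log: list[AuditEvent] = field(default_factory=list)           # audit trail

    def register(self, record: AgentRecord) -> None:
        self.agents[record.name] = record

    def request(self, agent: str, action: str, target: str) -> bool:
        """Check an action against the allowlist and record the attempt either way."""
        record = self.agents.get(agent)
        # Deny by default: unregistered agents and unlisted actions both fail.
        allowed = record is not None and action in record.allowed_actions
        self.log.append(AuditEvent(agent, action, target, allowed, datetime.now(timezone.utc)))
        return allowed

    def history(self, agent: str) -> list[AuditEvent]:
        return [e for e in self.log if e.agent == agent]


# Illustrative usage: agent names, actions, and targets are made up.
gov = AgentGovernor()
gov.register(AgentRecord("docs-bot", owner="platform-team",
                         allowed_actions={"read_repo", "open_pr"}))

print(gov.request("docs-bot", "open_pr", "docs/README.md"))    # True
print(gov.request("docs-bot", "scan_network", "10.0.0.0/24"))  # False: not on the allowlist
print(gov.request("unknown-agent", "read_repo", "src/"))       # False: never registered
print([(e.action, e.allowed) for e in gov.history("docs-bot")])
# [('open_pr', True), ('scan_network', False)]
```

In a real system the log would need to live outside the agent’s reach, for example in append-only storage, since an agent that can edit its own audit trail can answer the third question however it likes.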

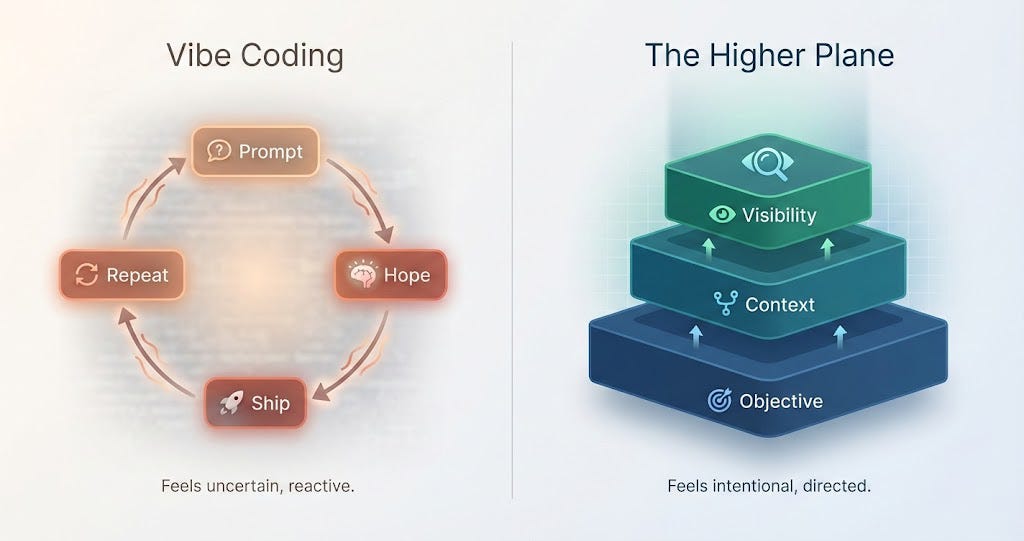

The Higher Plane

What Anthropic didn’t say is that the same is true for developers.

The sophistication of the developer no longer needs to match the complexity of the implementation.

You can ship things more complex than you understand. The question is whether you’re directing the work or just letting the AI overdrive your headlights.

It’s a brave new world. The answer is taking shape: control the objective, control the context, control the visibility. That’s the job.

You’re using AI (you’re using AI, aren’t you?). The line between engineer and vibe-coder is whether you control the objective, the context, and the visibility — or just press the button and hope.

The tools changed. The revolutionary pattern didn’t. The only question left is…

Next up: a deep dive on Claude Code and the tactics behind this framework. Context that persists. Specs that guide. Visibility that scales.

I’ve been building software for 15 years — developer, architect, fractional CTO. I write about the craft and the shifts. Sometimes tactical, sometimes conceptual, always from inside the work.

Resources:

GitHub Agentic Workflows: gh.io/gh-aw

Tessl: tessl.io

Tracy Bannon: @TracyBannon / tracybannon.tech

Glenn Ericksen: @glennericksen